Nasa

Introduction

In the world of statistics and data science, nothing ever really gets done until you have some actual data to work with. Sometimes you can collect your own, sometimes you generate it yourself, but most of the time, you’re just gonna have to go out and get it. Chances are you’ll need to get your data from the internet, so you’ll need to familiarize yourself with the world of web scraping and APIs. Hopefully, you can gain some pointers as I show what I did to (technically) hack NASA.

Setting up your Data Scrape

There are three primary python packages that I use when scraping: Pandas, Requests, and Beautiful Soup. I won’t get into the detail of all of these, but you can find documentation below.

- Pandas

- Requests

- Beautiful Soup

</ul>

Two main ways to scrape web data are with an API, or via the website's html structure. In this post, I will use an API. There are a lot of awesome websites that distribute free APIs that can easily pull data from their website. You can find a good list, as well as more information on using APIs [here](https://rapidapi.com/collection/list-of-free-apis).

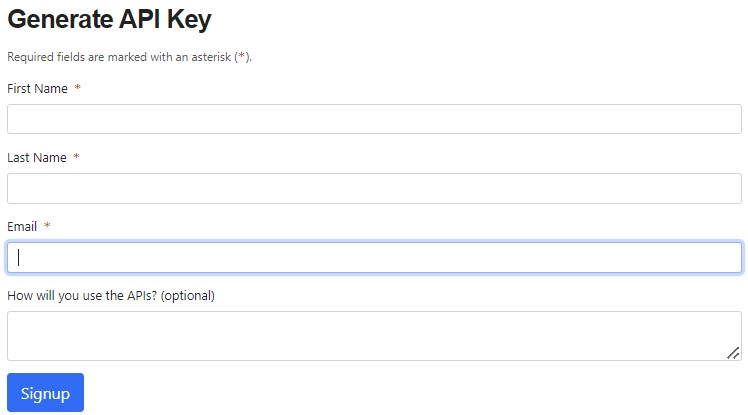

As mentioned before, I used a free API from NASA. They encourage people to use their data for app development and other purposes. Here is how you can get your own NASA API.

:warning: **Note:** Many websites do not offer their own APIs, so make sure that you are courteous and respectful as you scrape web data.

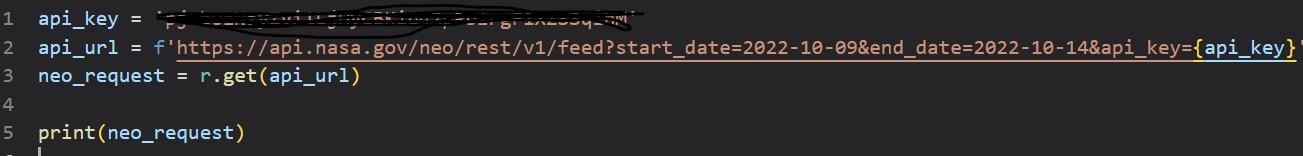

Below you can see how I used my API key and the requests package to bring in some data about astroids that have approached earth recently

#### Sorting Your Request Into Actual Data

Once you have performed a successful request, you have your data, but it isn't in the right format. It is basically a fancy json file that technically contains the information, but is hardly organized in a usable form. There is no one good way to pull this data into a pandas dataframe, but I like to start by saving the contents of request using something like this:

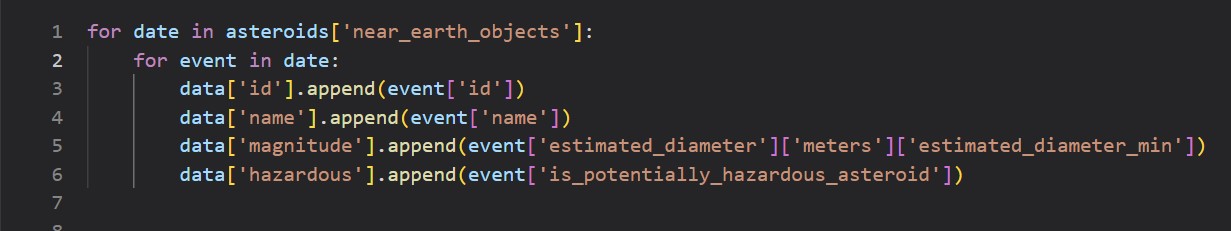

json_request = request.json() From there, use subsetting and for loops to pull data into your pandas dictionary. Here is an example of how I like to do it.  #### Conclusion I hope that this has been a good guide to show you some more about web scraping. Remember that every instance will be a little different, so it takes lots of good practice to gain confidence in this topic. Just keep trying new things and refer to documentation for help. You can see my complete code at [this link](https://github.com/parkcherrington/STAT386-Project2). If you have any questions or comments, please leave them below! Thanks for reading!